duration <- read_csv("data/coretta2018/token-measures.csv") |>

rename(

c2_voicing = c2_phonation

) |>

mutate(

c2_voicing = factor(c2_voicing, levels = c("voiceless", "voiced")),

vowel = factor(vowel, levels = c("a", "o", "u")),

c2_place = factor(c2_place, levels = c("coronal", "velar"))

) |>

drop_na(v1_duration)

contrasts(duration$vowel) <- "contr.sum"Including group-level effects in brms

University of Edinburgh

Coretta 2018

Data from my PhD dissertation.

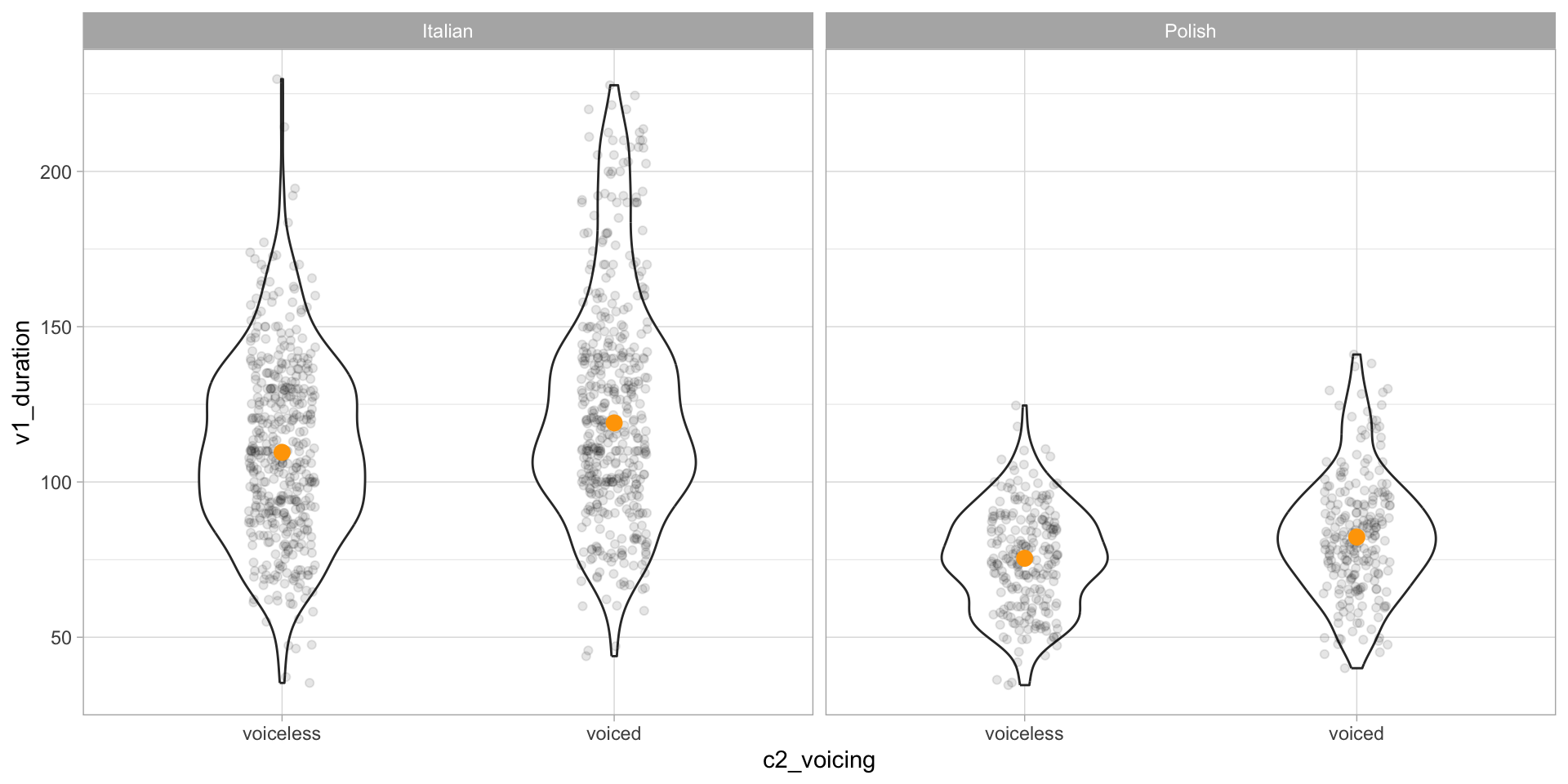

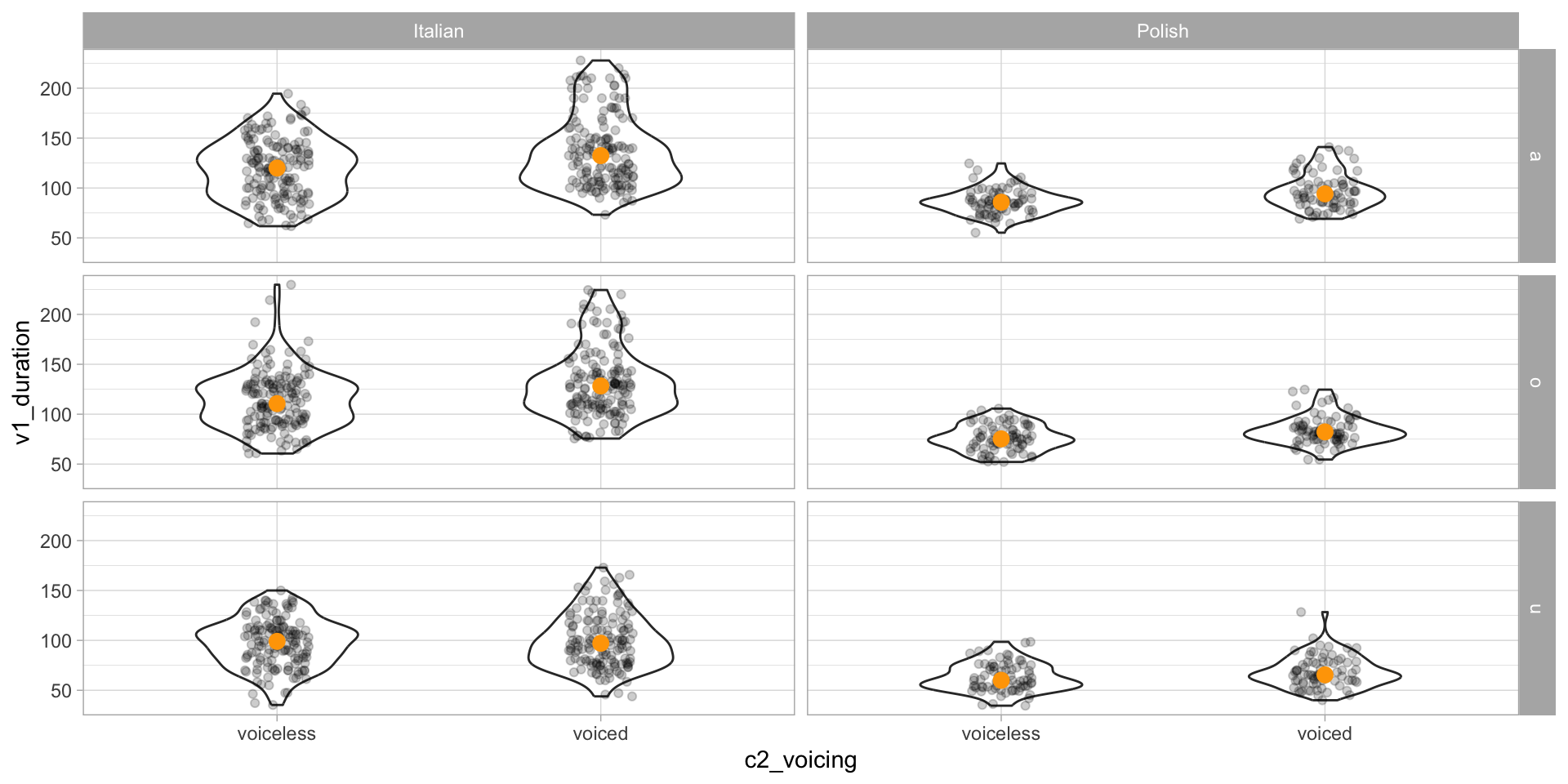

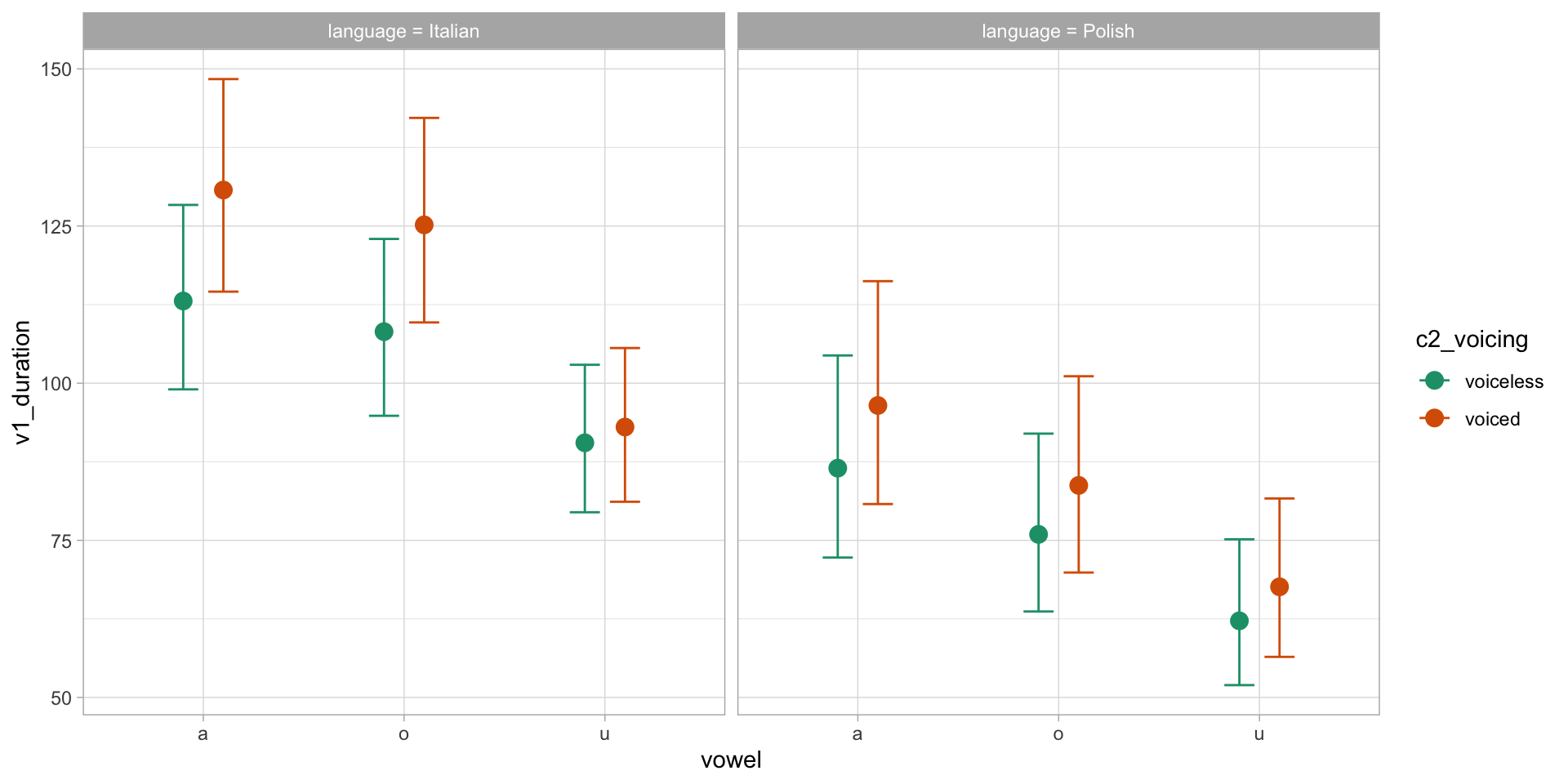

- Voicing effect: vowels tend to be longer when followed by voiced consonants and shorter when followed by voiceless consonants.

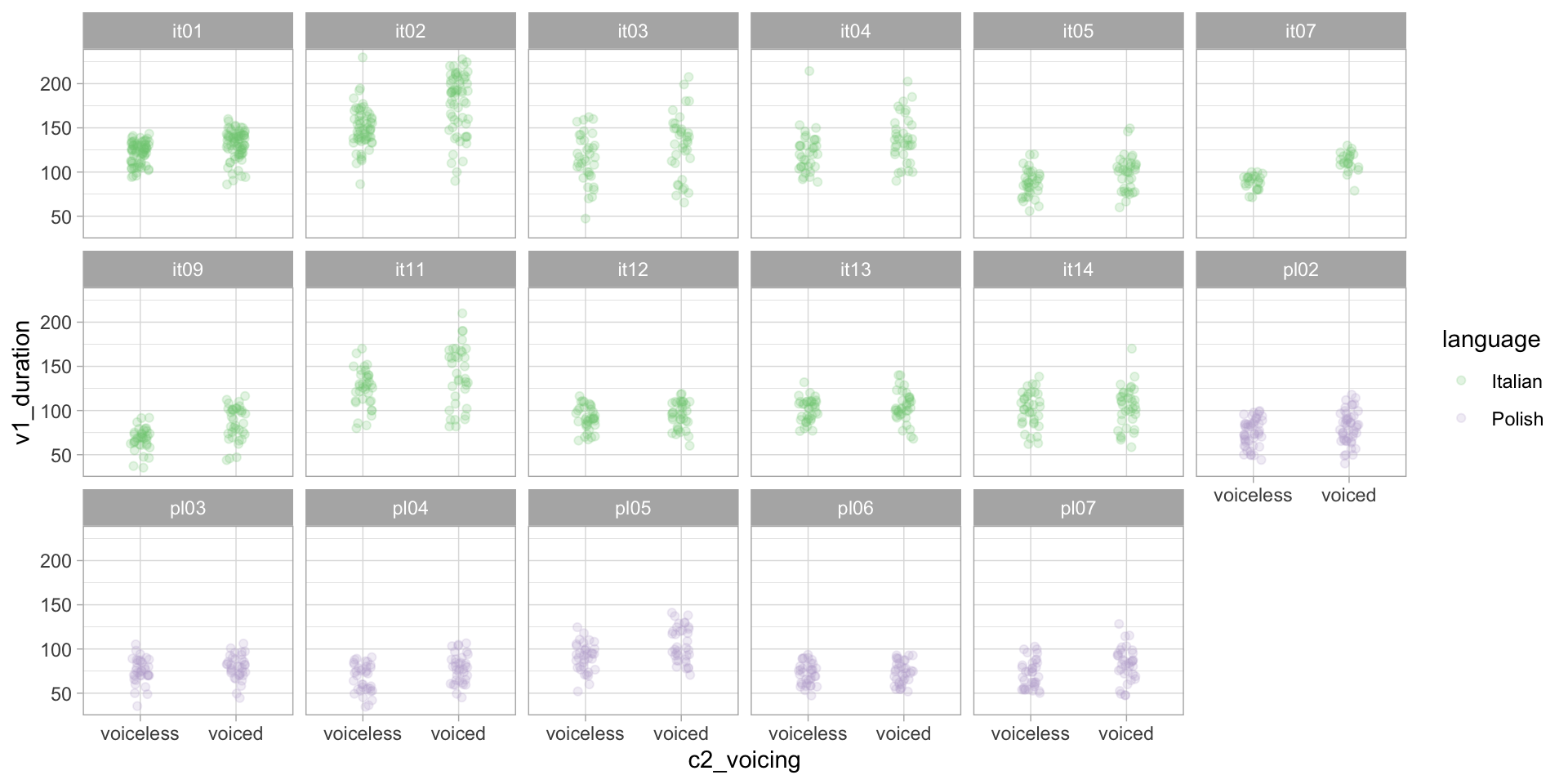

Effect of stop voicing on the duration of preceding vowels in Italian and Polish.

/pVCV/ words in frame sentence.

- C is one of /t, d, k, ɡ/.

- V is /a, o, u/.

Read the data

The tibble duration

# A tibble: 1,342 × 5

speaker word vowel c2_voicing v1_duration

<chr> <chr> <fct> <fct> <dbl>

1 it01 pugu u voiced 95.2

2 it01 pada a voiced 139.

3 it01 poco o voiceless 127.

4 it01 pata a voiceless 127.

5 it01 boco o voiceless 132.

6 it01 podo o voiced 125.

7 it01 boto o voiceless 134.

8 it01 paca a voiceless 119.

9 it01 bodo o voiced 130.

10 it01 pucu u voiceless 99.1

# ℹ 1,332 more rowsVowel duration by C2 voicing

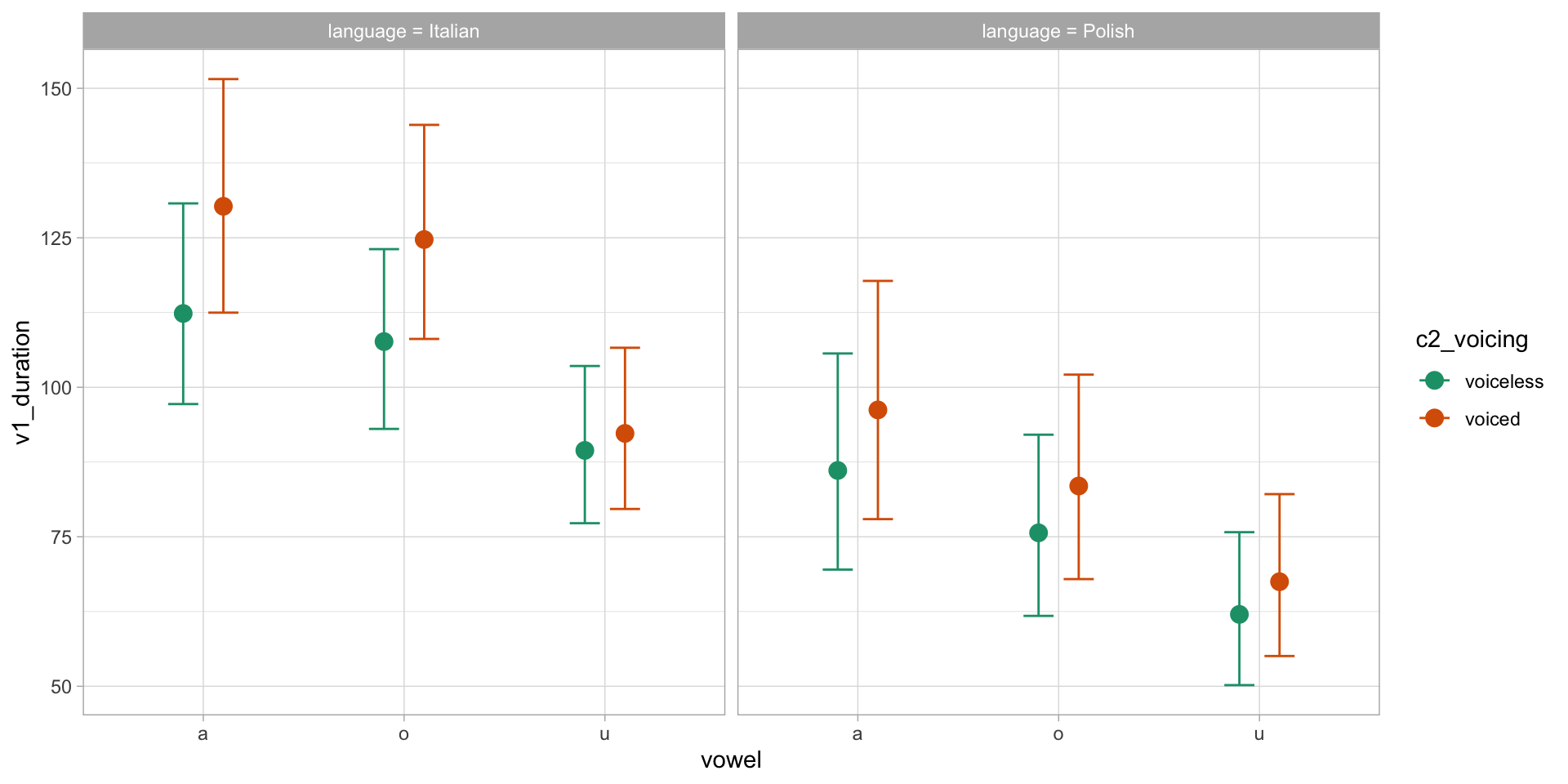

Vowel duration by C2 voicing and vowel

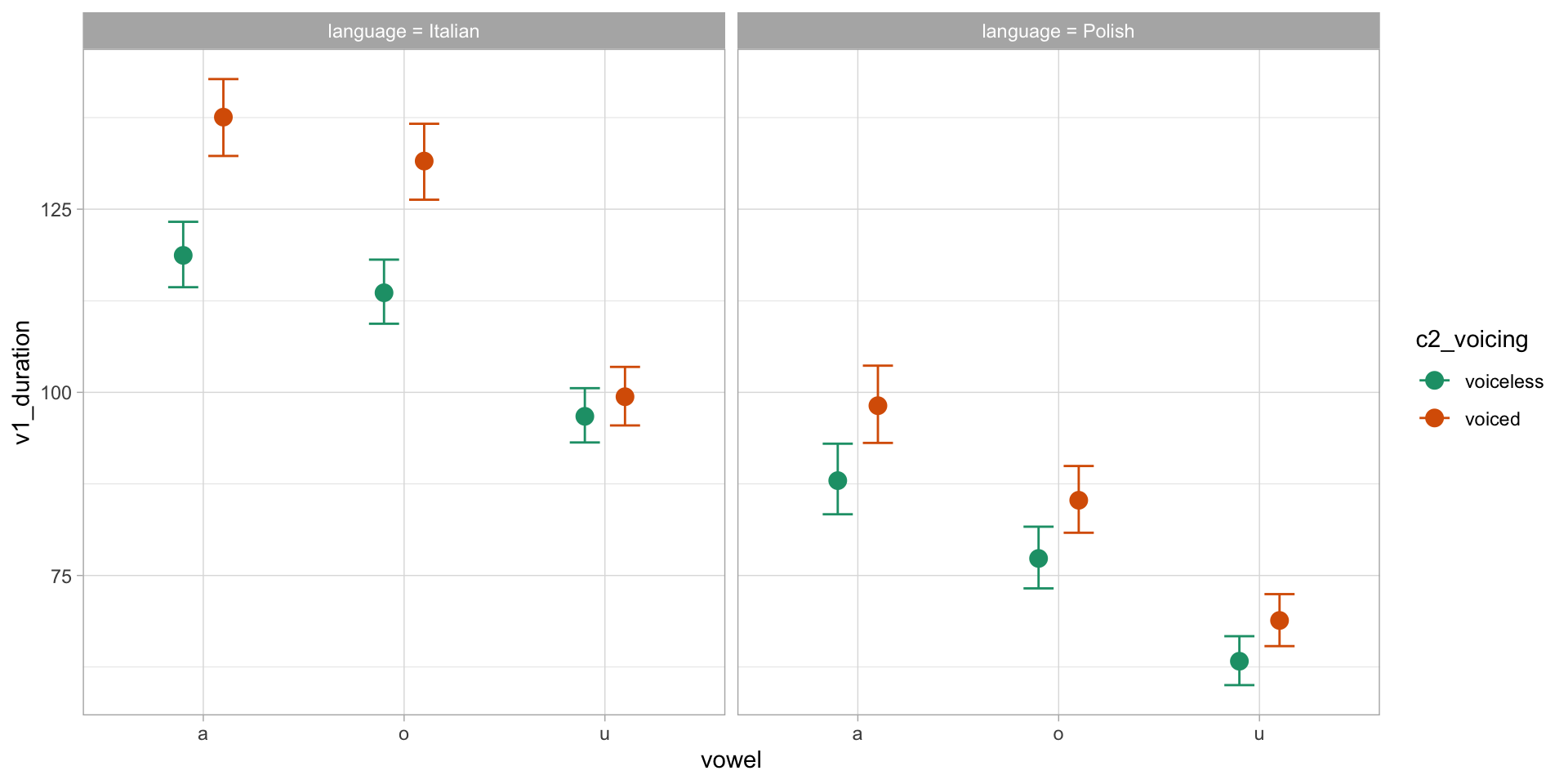

Bayesian linear model of vowel duration

Bayesian linear model of vowel duration: summary

Family: lognormal

Links: mu = identity; sigma = identity

Formula: v1_duration ~ c2_voicing * vowel * language

Data: duration (Number of observations: 1342)

Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

total post-warmup draws = 4000

Regression Coefficients:

Estimate Est.Error l-90% CI u-90% CI

Intercept 4.67 0.01 4.65 4.68

c2_voicingvoiced 0.11 0.02 0.08 0.13

vowel1 0.08 0.02 0.06 0.11

vowel2 0.04 0.02 0.01 0.07

languagePolish -0.37 0.02 -0.40 -0.34

c2_voicingvoiced:vowel1 0.04 0.02 0.00 0.08

c2_voicingvoiced:vowel2 0.04 0.02 0.00 0.08

c2_voicingvoiced:languagePolish -0.01 0.03 -0.06 0.04

vowel1:languagePolish 0.07 0.03 0.03 0.11

vowel2:languagePolish -0.02 0.03 -0.06 0.03

c2_voicingvoiced:vowel1:languagePolish -0.03 0.04 -0.09 0.03

c2_voicingvoiced:vowel2:languagePolish -0.04 0.04 -0.10 0.02

Rhat Bulk_ESS Tail_ESS

Intercept 1.00 3672 3397

c2_voicingvoiced 1.00 3623 2981

vowel1 1.00 2170 2941

vowel2 1.00 2112 2529

languagePolish 1.00 3020 3149

c2_voicingvoiced:vowel1 1.00 2040 2687

c2_voicingvoiced:vowel2 1.00 2232 2625

c2_voicingvoiced:languagePolish 1.00 2506 2788

vowel1:languagePolish 1.00 2119 2923

vowel2:languagePolish 1.00 2011 2814

c2_voicingvoiced:vowel1:languagePolish 1.00 2077 2716

c2_voicingvoiced:vowel2:languagePolish 1.00 2172 2574

Further Distributional Parameters:

Estimate Est.Error l-90% CI u-90% CI Rhat Bulk_ESS Tail_ESS

sigma 0.24 0.00 0.23 0.25 1.00 5636 3023

Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

and Tail_ESS are effective sample size measures, and Rhat is the potential

scale reduction factor on split chains (at convergence, Rhat = 1).Conditional predictions of vowel duration

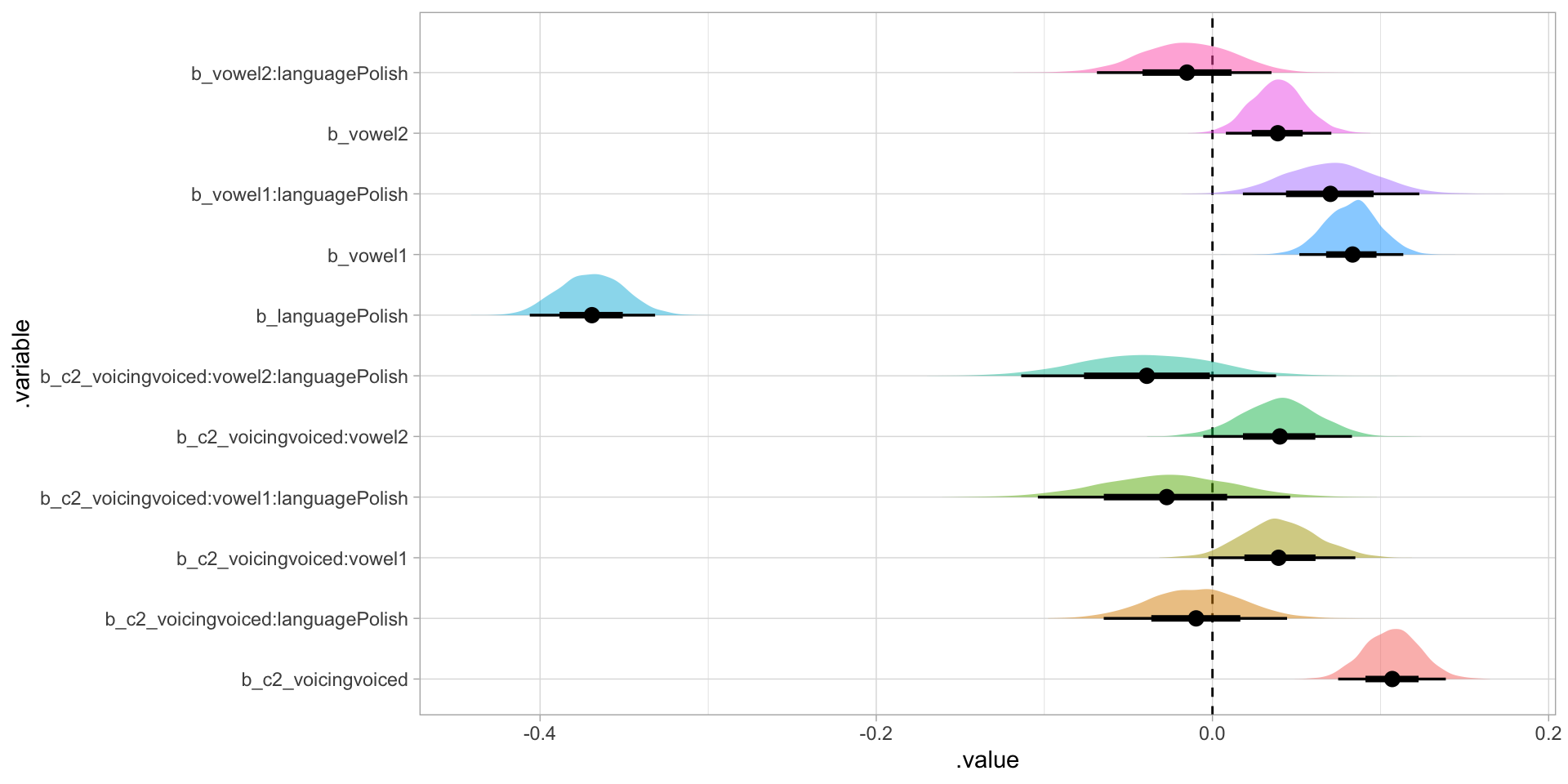

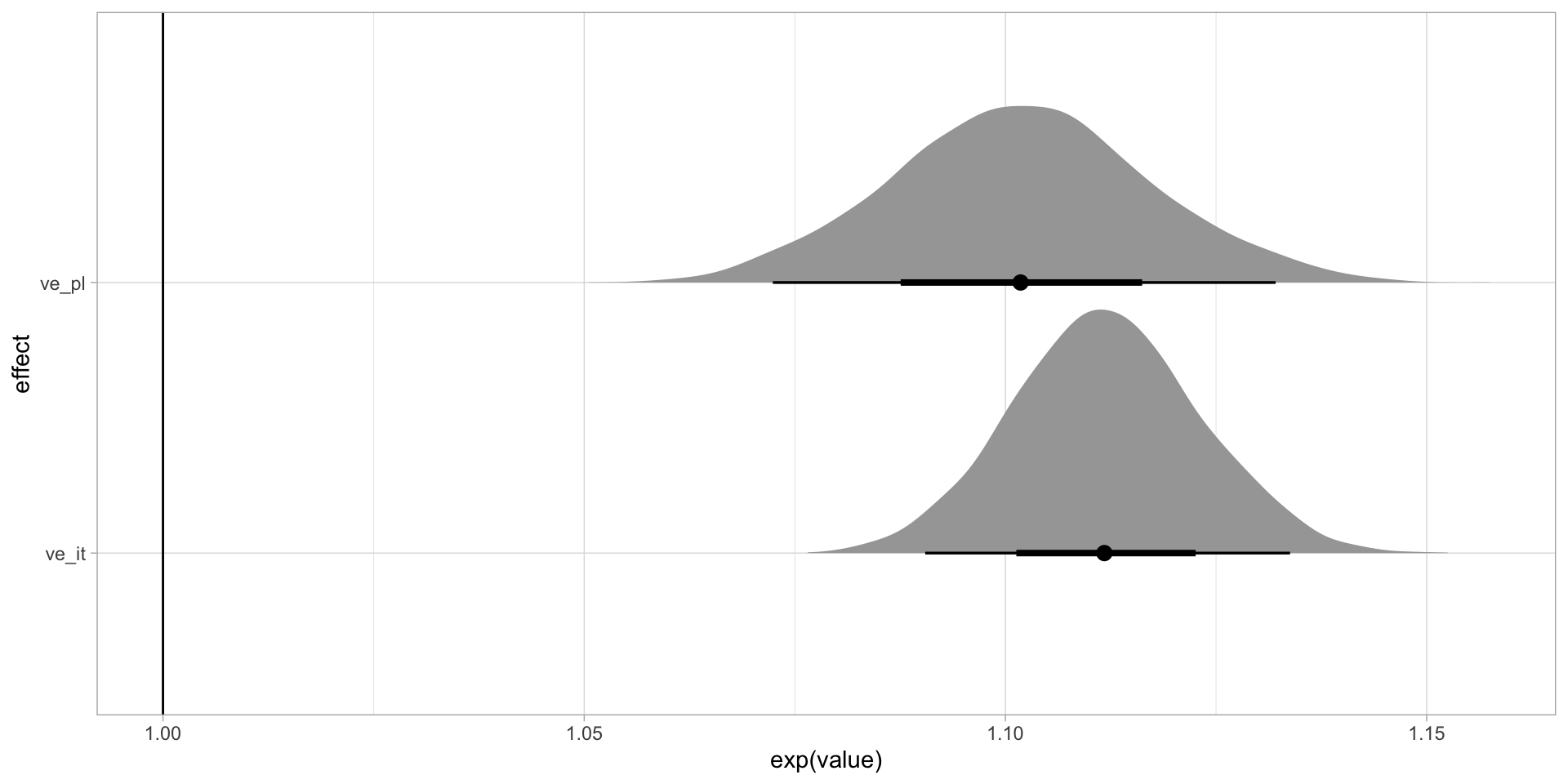

Posterior probability distributions of coefficients

Spread draws

# A tibble: 4,000 × 15

.chain .iteration .draw b_Intercept b_c2_voicingvoiced b_vowel1 b_vowel2

<int> <int> <int> <dbl> <dbl> <dbl> <dbl>

1 1 1 1 4.67 0.109 0.0840 0.0156

2 1 2 2 4.66 0.118 0.0875 0.0526

3 1 3 3 4.65 0.112 0.0704 0.0437

4 1 4 4 4.66 0.118 0.0505 0.0371

5 1 5 5 4.68 0.0861 0.0997 0.0251

6 1 6 6 4.66 0.0953 0.0659 0.0536

7 1 7 7 4.64 0.132 0.0774 0.0588

8 1 8 8 4.65 0.119 0.0753 0.0502

9 1 9 9 4.67 0.110 0.103 0.0285

10 1 10 10 4.68 0.0929 0.0928 0.0378

# ℹ 3,990 more rows

# ℹ 8 more variables: b_languagePolish <dbl>,

# `b_c2_voicingvoiced:vowel1` <dbl>, `b_c2_voicingvoiced:vowel2` <dbl>,

# `b_c2_voicingvoiced:languagePolish` <dbl>, `b_vowel1:languagePolish` <dbl>,

# `b_vowel2:languagePolish` <dbl>,

# `b_c2_voicingvoiced:vowel1:languagePolish` <dbl>,

# `b_c2_voicingvoiced:vowel2:languagePolish` <dbl>Posterior probability distributions of effect of voicing

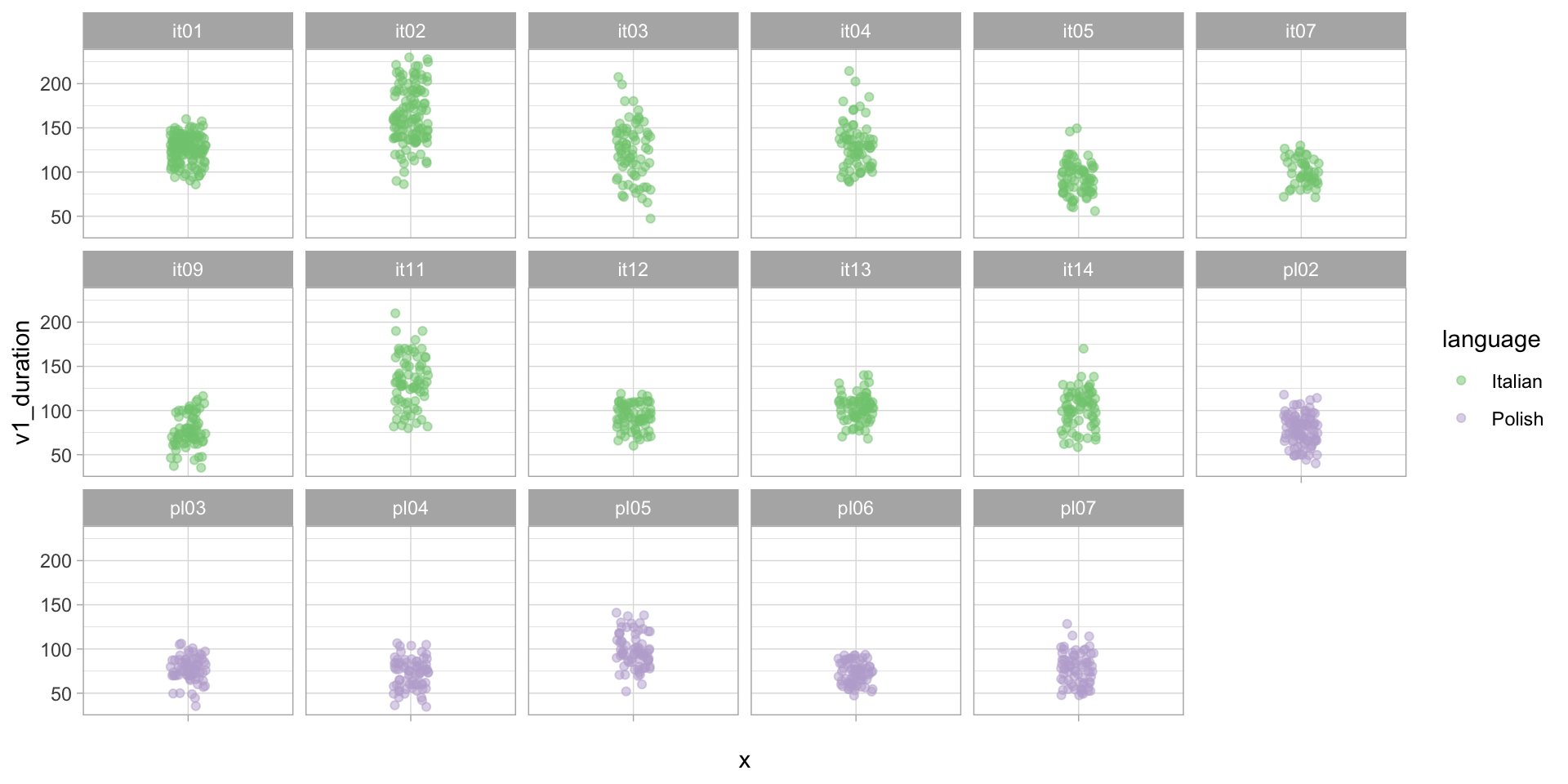

Vowel duration by speaker

Random effects are not random

Terminology is unhelpful:

Random effect, multilevel (hyper)parameters, group-level effects, varying effects.

As in opposition to fixed effects, population-level effects.

Mixed-effects models, random-effects models, random and fixed-effects models, multilevel models, nested models, hierarchical models…

Definitions are unhelpful:

- At least five different definitions.

Grouping in the observations not covered by the population-level effects.

- Related to (in)dependence between observations.

Common variables that are included in models as group-level effects are participant/speaker, item/word.

- Note that some variables could be entered as group-level effects in some cases but not others. It depends on the study design.

Group-level intercepts

Group-level intercepts: summary

Family: lognormal

Links: mu = identity; sigma = identity

Formula: v1_duration ~ c2_voicing * vowel * language + (1 | speaker)

Data: duration (Number of observations: 1342)

Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

total post-warmup draws = 4000

Multilevel Hyperparameters:

~speaker (Number of levels: 17)

Estimate Est.Error l-90% CI u-90% CI Rhat Bulk_ESS Tail_ESS

sd(Intercept) 0.21 0.04 0.15 0.29 1.01 959 1605

Regression Coefficients:

Estimate Est.Error l-90% CI u-90% CI

Intercept 4.63 0.07 4.52 4.73

c2_voicingvoiced 0.11 0.01 0.09 0.12

vowel1 0.09 0.01 0.07 0.11

vowel2 0.04 0.01 0.03 0.06

languagePolish -0.33 0.11 -0.52 -0.14

c2_voicingvoiced:vowel1 0.04 0.01 0.02 0.06

c2_voicingvoiced:vowel2 0.04 0.01 0.02 0.06

c2_voicingvoiced:languagePolish -0.01 0.02 -0.04 0.02

vowel1:languagePolish 0.06 0.02 0.03 0.09

vowel2:languagePolish -0.02 0.02 -0.05 0.01

c2_voicingvoiced:vowel1:languagePolish -0.03 0.02 -0.07 0.01

c2_voicingvoiced:vowel2:languagePolish -0.04 0.02 -0.08 0.00

Rhat Bulk_ESS Tail_ESS

Intercept 1.00 1060 1393

c2_voicingvoiced 1.00 4434 3184

vowel1 1.00 3416 2838

vowel2 1.00 3307 3094

languagePolish 1.00 1200 1489

c2_voicingvoiced:vowel1 1.00 3185 2918

c2_voicingvoiced:vowel2 1.00 2981 3041

c2_voicingvoiced:languagePolish 1.00 4238 2869

vowel1:languagePolish 1.00 3288 3188

vowel2:languagePolish 1.00 3102 2711

c2_voicingvoiced:vowel1:languagePolish 1.00 3068 3039

c2_voicingvoiced:vowel2:languagePolish 1.00 3183 2408

Further Distributional Parameters:

Estimate Est.Error l-90% CI u-90% CI Rhat Bulk_ESS Tail_ESS

sigma 0.15 0.00 0.14 0.15 1.00 4784 2453

Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

and Tail_ESS are effective sample size measures, and Rhat is the potential

scale reduction factor on split chains (at convergence, Rhat = 1).Conditional predictions of vowel duration

Posterior probability distributions of effect of voicing

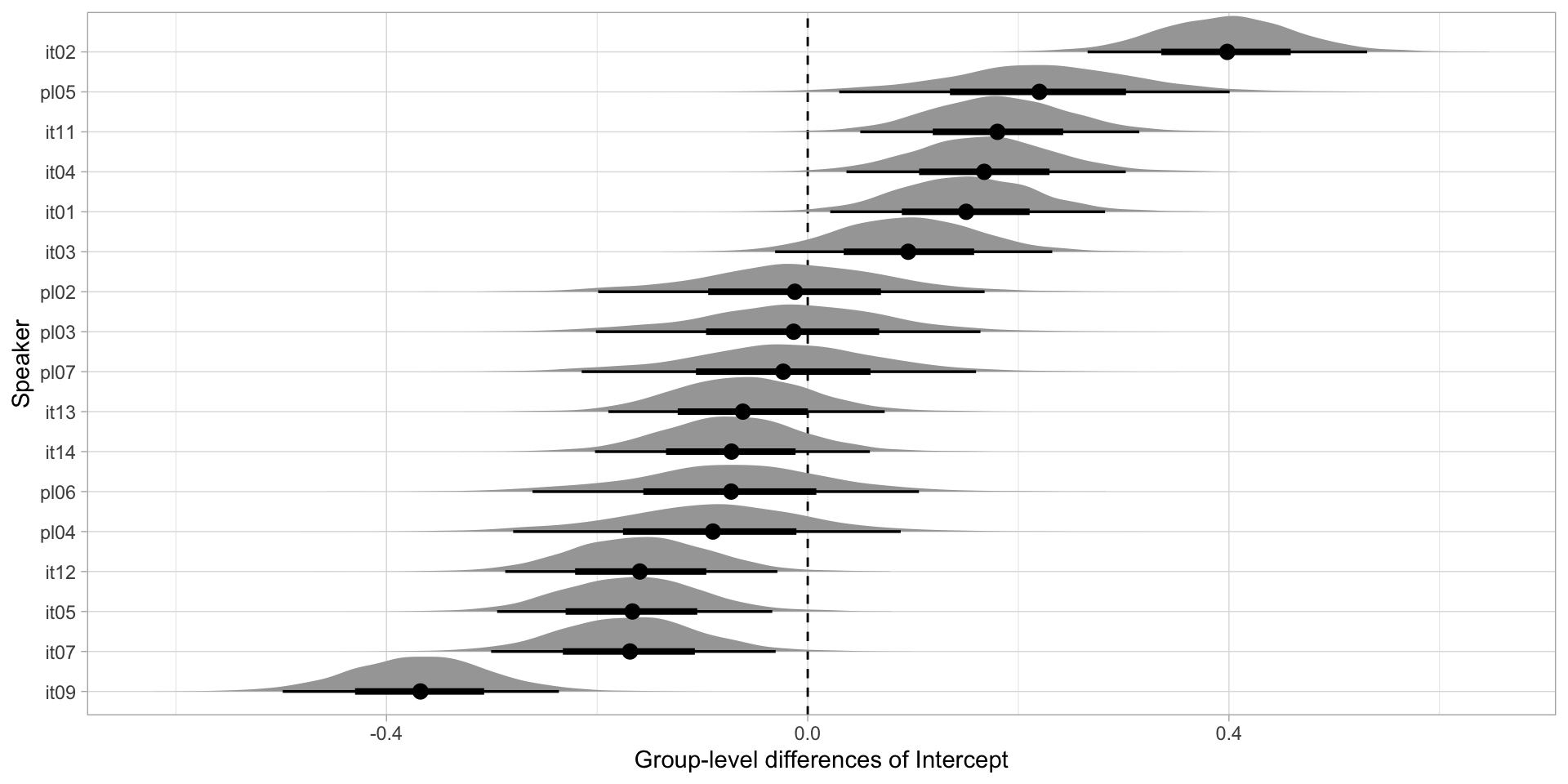

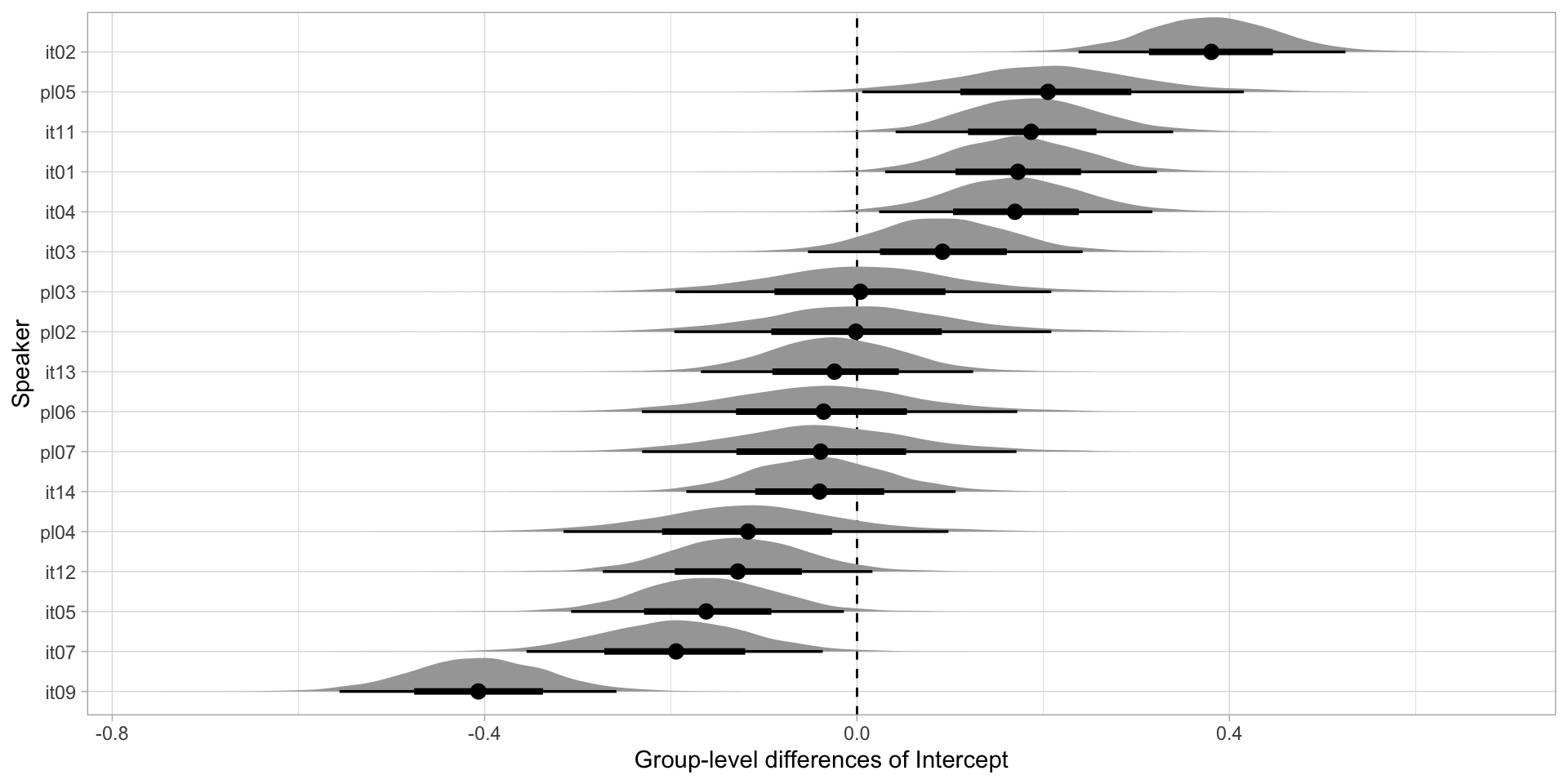

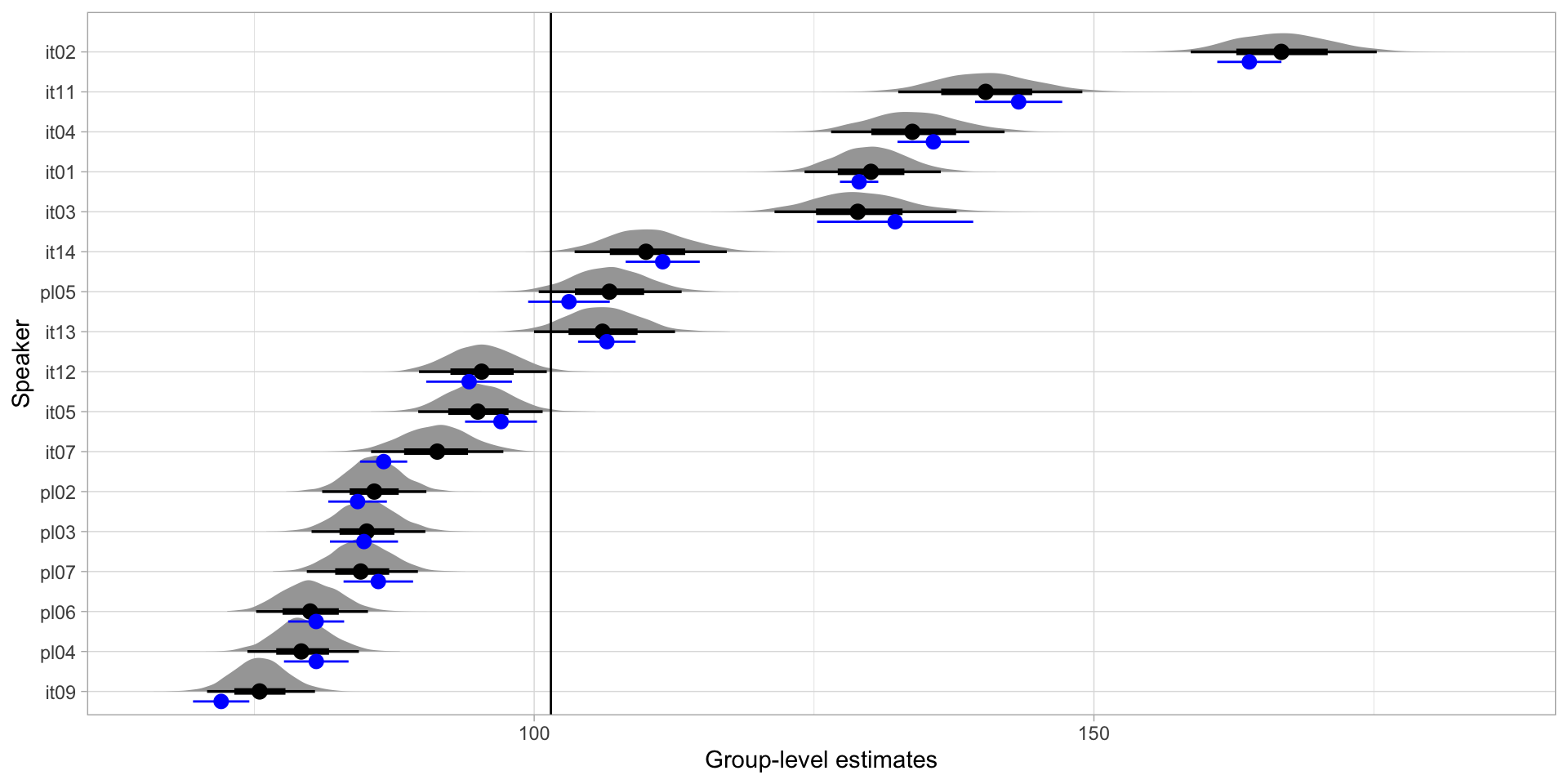

Group-level differences: Intercept

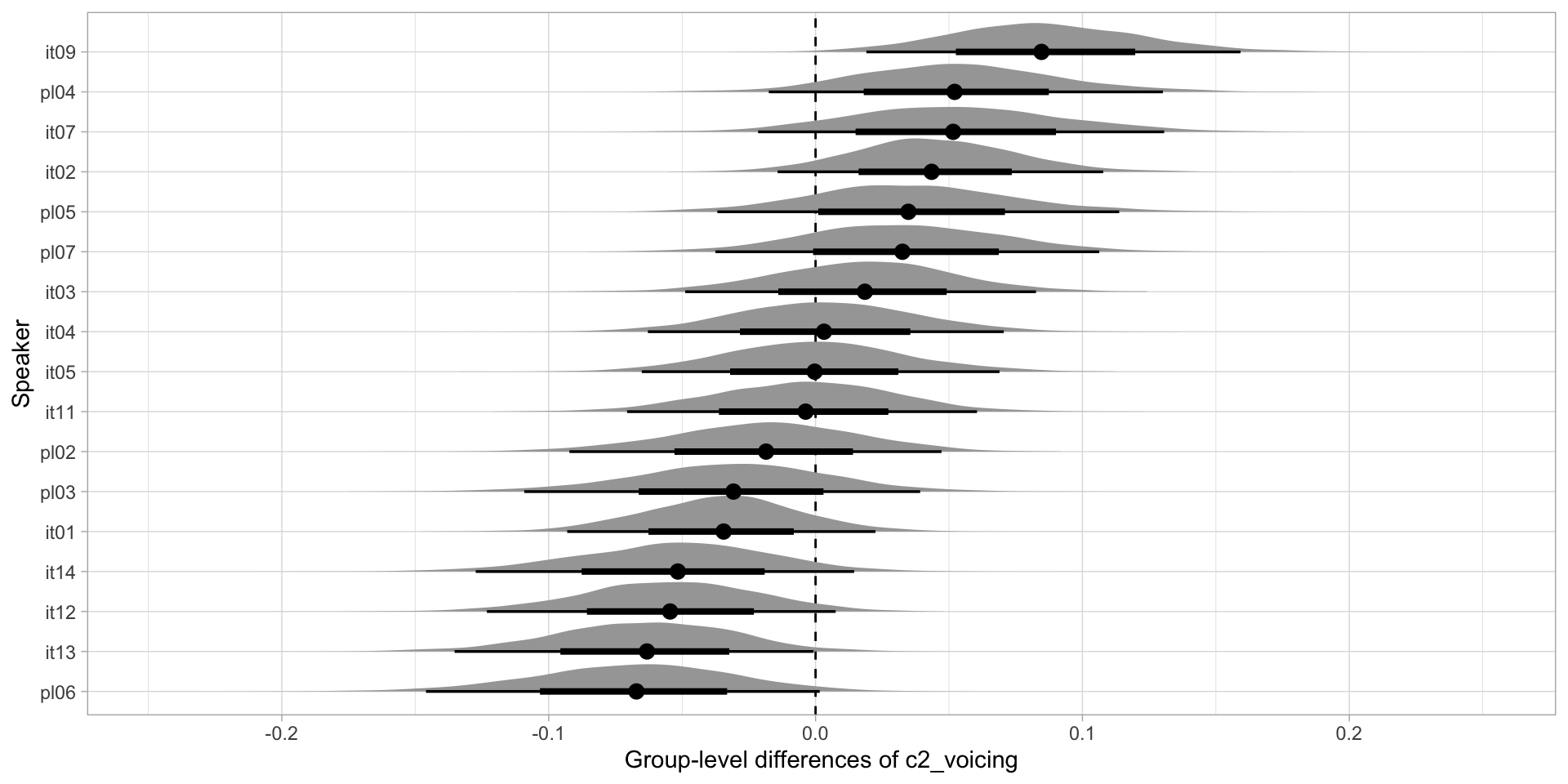

Voicing effect by speaker

Group-level intercepts and slopes

Group-level slopes must be variables that are within-grouping:

c2_voicingandvowelare within-speaker so they can and should be included as group-level slopes.languageis between-speaker (each speaker is a speaker of either Italian or Polish).

Whether a variable is within- or between-grouping depends on the study design although some variables are most likely between-grouping (gender, socio-economic status, education…).

Group-level differences: Intercept

Group-level differences: c2_voicing

Conditional predictions of vowel duration

Posterior probability of voicing effect

Partial pooling and shrinkage

From a statistical point of view, group-level effects have the following characteristics:

The are estimated through partial pooling.

- All estimates affect each other.

Partial pooling causes shrinkage.

- The group-level estimates are shrunk towards the population-level effect.

Shrinkage

Summary

Group-level terms allow the model to account for grouping in the data not accounted for by the population-level terms.

It is important to include group-level terms. Failure to do so will lead to over-confident estimates that won’t replicate.

Both group-level intercept and slopes should be included (including interactions!).